Bots and Interactive AI: Modern Inclusive Classrooms of Tomorrow

How can collaborative PD methodologies be adapted to individual visual impairments to empower stakeholders in the design process of an inclusive AR/MR learning solution?

“Technologies for blind children, carefully tailored through user-centered design approaches, can make a significant contribution to cognitive development of these children” (Sanchez, 2008)

What did the project involve?

The project’s initial research questions fell within the scope of developing cross-modal interactive technology to promote inclusive learning through a participatory design (PD) process. The three main research questions were:

- How can collaborative PD methodologies be adapted to individual visual impairments to empower stakeholders in the design process of an inclusive AR/MR learning solution?

- Can iterative user evaluation be successfully coupled with PD to improve and evaluate inclusion and/or user engagement for learning solutions?

- Can a tangible relational database be implemented to allow visually impaired users to create and organise notes by hand through an interactive voice interface?

The project was conducted in three main stages:

Stage I

This stage set out to create an ecosystem of Bots, AI and conversational interfaces on the Amazon Alexa device to assist students and teachers, e.g. Bots for narration, storytelling, quizzing, mnemonics, mind mapping and, possibly, cognitive exploration via the IBM Watson cognitive API.

Stage II

With a minimum viable product in place, Stage II involved developing an interactive tabletop surface to extend Stage I into an AR/MR experience, drawing on alternative sensory modalities such as olfactory, haptic, visual and implicit input to enrich the capabilities of the Bots.

Stage III

Building on the foundations laid in the previous stages, Stage III sought to address the project’s key research question: a tangible relational database that uses the available sensory modalities as unique IDs for relationships. This allowed visually impaired children to take, organise and explore personal collections of notes (corpora) using the tangible tabletop, communicating through the voice interface with the help of the Bots.
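The report does not say how the tangible relational database was implemented. As a rough illustration only, the following minimal sketch assumes that each physical token on the tabletop is identified by a sensory cue (for example a scent or a texture) and that this cue acts as the key to which notes and relationships are attached. The names `SensoryId`, `TangibleNoteStore`, `add_note`, `link` and `related_notes` are hypothetical, and the in-memory dictionaries stand in for whatever storage the project actually used.

```python
from collections import defaultdict
from dataclasses import dataclass, field


@dataclass(frozen=True)
class SensoryId:
    """Hypothetical key: a physical token identified by a modality and a cue."""
    modality: str  # e.g. "smell", "haptic" (assumed values)
    cue: str       # e.g. "lavender", "ridged" (assumed values)


@dataclass
class TangibleNoteStore:
    """Hypothetical in-memory sketch: notes are filed under tokens, and
    tokens can be linked to each other to form relationships."""
    notes: dict = field(default_factory=lambda: defaultdict(list))
    links: dict = field(default_factory=lambda: defaultdict(set))

    def add_note(self, token: SensoryId, text: str) -> None:
        # A note (e.g. dictated via the voice interface) is stored under the token.
        self.notes[token].append(text)

    def link(self, a: SensoryId, b: SensoryId) -> None:
        # Relationships are symmetric: either token leads to the other.
        self.links[a].add(b)
        self.links[b].add(a)

    def related_notes(self, token: SensoryId) -> list:
        # Notes on the token itself, then notes on each directly linked token,
        # visited in a stable order so spoken read-outs are repeatable.
        result = list(self.notes.get(token, []))
        for other in sorted(self.links.get(token, set()),
                            key=lambda t: (t.modality, t.cue)):
            result.extend(self.notes.get(other, []))
        return result


if __name__ == "__main__":
    lavender = SensoryId("smell", "lavender")
    ridged = SensoryId("haptic", "ridged")
    store = TangibleNoteStore()
    store.add_note(lavender, "Plants need sunlight")
    store.add_note(ridged, "Photosynthesis makes glucose")
    store.link(lavender, ridged)
    print(store.related_notes(lavender))
```

Keying everything on a frozen (hashable) `SensoryId` reflects the idea of a sensory cue acting as a unique ID: a child could pick up the lavender-scented token and ask for its notes, and the store would also return the notes on any token linked to it.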

Who are the team and what do they bring?

- Oussama Metatla (Computer Science, University of Bristol) is a researcher whose interests include investigating multisensory technology and designing with and for people with visual impairments. He has led a project focusing on inclusive educational technology for mixed-ability groups in mainstream schools.

- Alison Oldfield (Education, University of Bristol) specialises in learning, technology and society, and in special and inclusive education. Her research interests include digital technologies, children and young people, and inclusion. Her previous professional experience includes work as a practitioner and researcher in education, youth work, digital technologies and outdoor learning.

- Marina Gall (Education, University of Bristol) is an expert in music education. She has been researching the use of technologies for music making in formal and informal educational settings, mainly with young people, and has conducted research on how new technologies can help disabled young people make music together.

- Clare Cullen (Computer Science, University of Bristol) is a researcher focused on the use of music, digital media and multisensory technology to promote learning and inclusion. She has a background in music psychology and media and arts technology.

- Taimur Ahmed (Computer Science, University of Bristol) is a researcher with interests in inclusion, conversational interfaces, technology-enhanced learning (TEL) and the implementation of AI/Bots. He has a professional background in political advisory work in the UK and in financial management consulting for the energy sector.

What were the results?

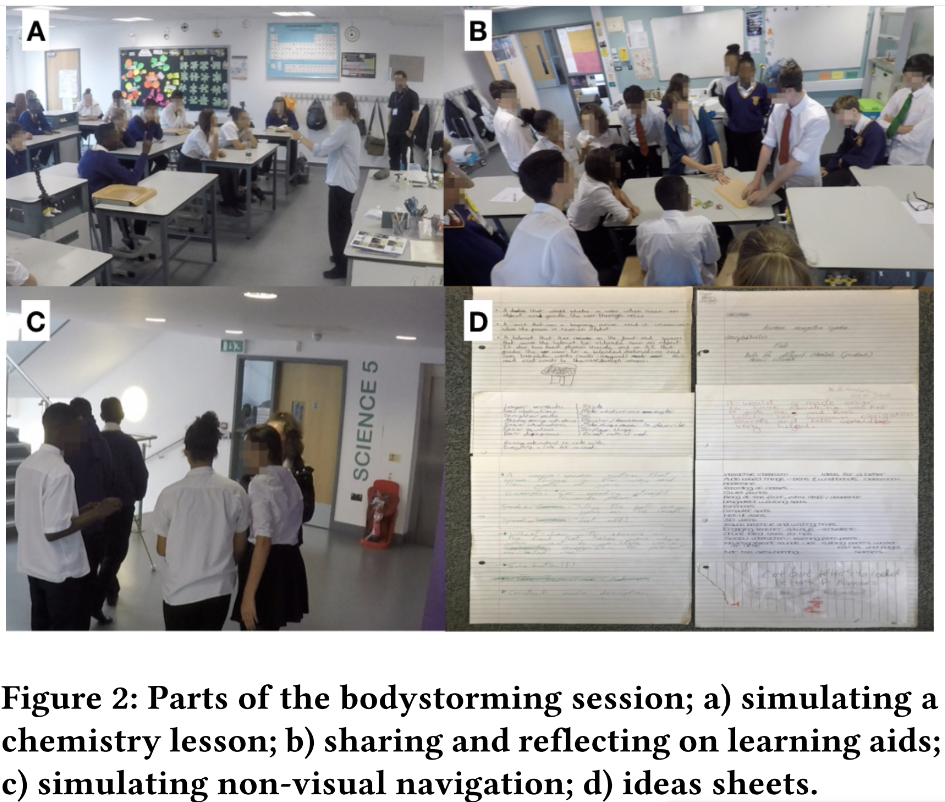

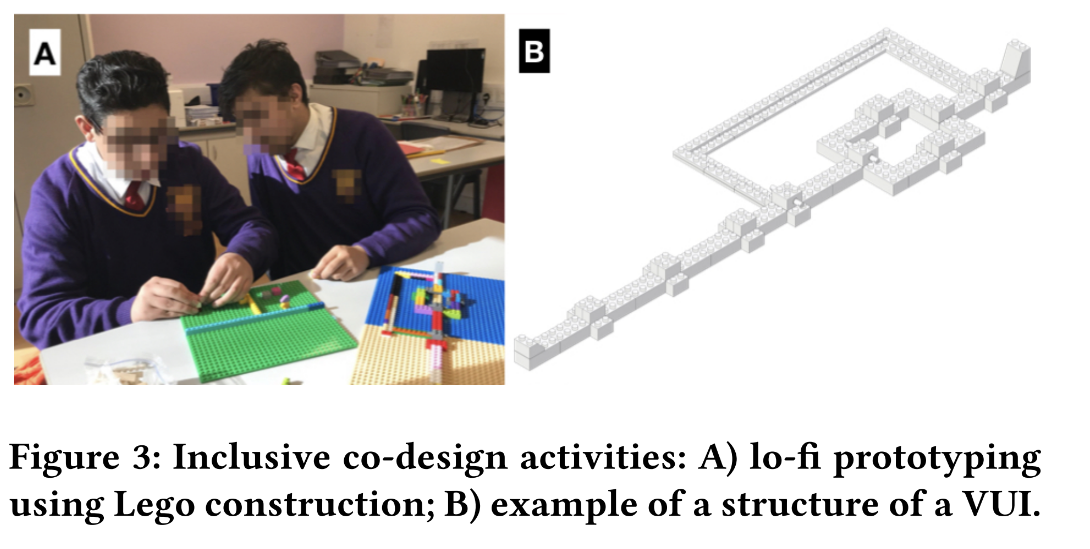

Following the project, the team co-wrote a paper titled ‘Voice User Interfaces in Schools: Co-designing for Inclusion With Visually-Impaired and Sighted Pupils’, which outlines the process and outcomes of the project. The project was based on inclusive co-design, beginning with focus group discussions followed by ‘in-school bodystorming’. These activities gave the team many insights into the challenges of inclusive education, along with suggestions for how voice technology might help address them. From these, the team constructed three scenarios, inspired by their engagement with educators and pupils, showing how voice user interfaces (VUIs) might be used in schools to promote inclusive education. Finally, the team ran three inclusive co-design workshops in schools, in which participants validated a scenario by designing a VUI application that exemplifies it.

Following the project, Oussama Metatla was awarded a considerable grant for public engagement as part of EPSRC’s Telling Tales of Engagement Competition.