Difficult Duets

When musicians are creating in dialogue with machines, how can humans solve problems pre-emptively? How do we cope in the moment? How do we recover? What new ideas emerge from the irreconcilable?

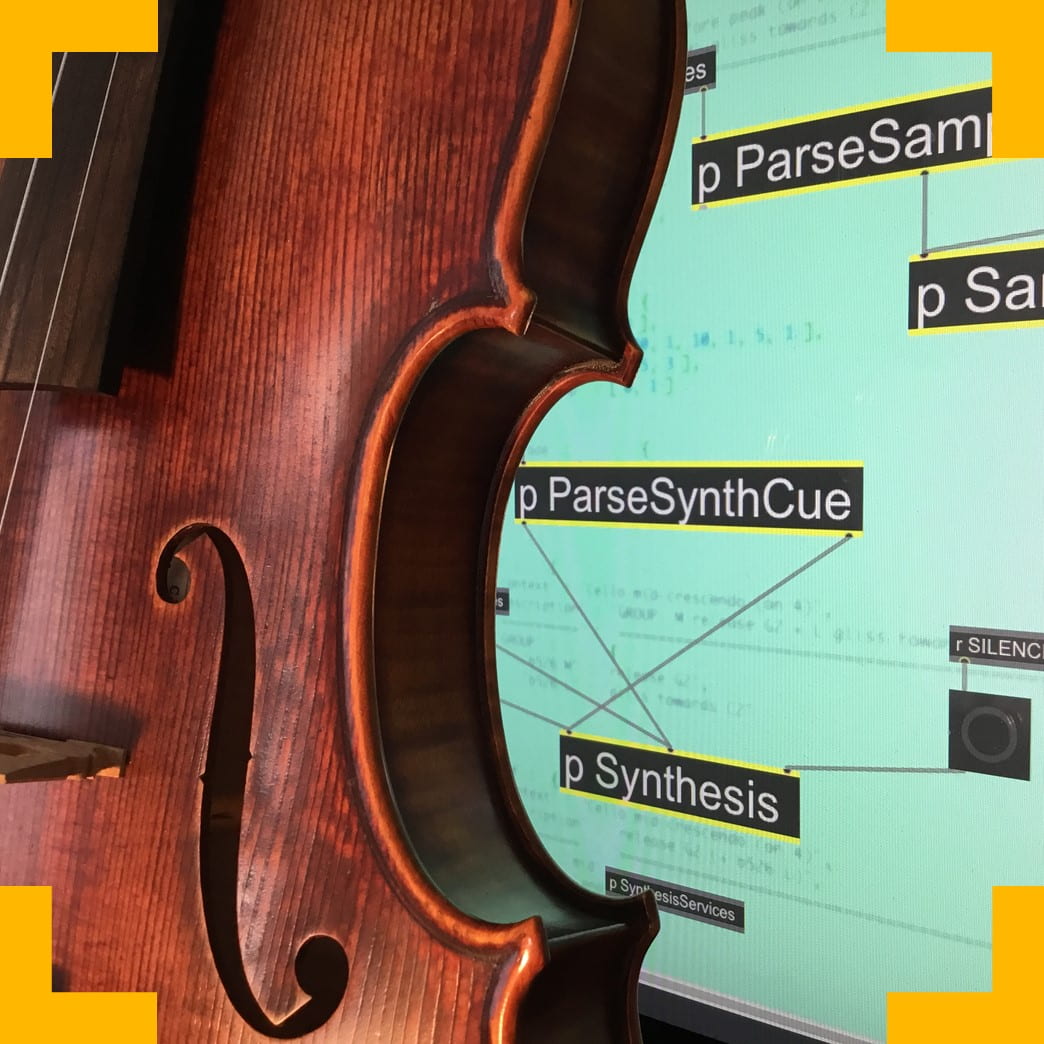

Digital technology is enabling new modes of live performance. ‘New interfaces for musical expression’ (NIME) emerged as a distinct field c.2001 from research in human-computer interaction (HCI). Many NIME instruments are intended as musical enablers for non-specialists. However, when expert music making is envisaged, there is an unresolved discourse around how ‘newness’ militates against musical ‘depth’ (contrast with the violin, whose long history has resulted in cumulative aesthetic, technical, and expressive capacity). Creative practices such as live coding bring new aesthetic possibilities, but also problems such as the ‘illegibility’ of the performance act (‘are they just checking email?’). There is a growing repertoire of concert music that combines the stage presence of classical players with new electronic soundworlds. Computer and AI-assisted tools play many roles in the composition process. In performance time, however, the tendency is to focus on practical issues – on ‘making it work’ – both in the technological platforms, and in the human performers ceding their interpretive agency.

Ensemble performers have to deal with other actors and agents. The motivation for making those agents electronic was the unique sonic possibilities that acoustic instruments cannot match; but then the technology cannot be assumed to behave like another human. The researchers began to wonder: what if they went further, and actively invited a challenging relationship?

Professional musicians rarely seek dispassionate distance (as might a surgeon, say, conducting robotic surgery), nor is their emotional immersion private (as it might be for a computer gamer). When they play fully scored music, it is invested with a complexity of musical thought that has taken a composer time to create. The performer negotiates a complex multi-layered territory. To captivate their audience, they put their expressive and technical, intellectual and emotional skills on the line.

Professional performers are trained to ‘not fail’. Yet in a film, opera, or novel, it is often a situation of extreme jeopardy that is character-defining and emotionally captivating. This project sought to take that out of the fictive and into our real interaction world, pushing the technology to push the humans, to test what happens at the point of breakdown.

What did the project involve?

This project was about the experience of humans interacting with new technology. More particularly, it investigated the experience of complex, real-time, intellectually and emotionally demanding interactions, where the technology seems to have a will of its own, and where the relationship is pushed through thresholds of challenge, discomfort, and breakdown. With such intimate but non-human interlocutors, how can humans solve problems pre-emptively? How do we cope in the moment? How do we recover? What new ideas emerge from the irreconcilable? In the future, how might we live well when our machines resist us?

By focusing on a particular domain – contemporary art concert music, combining human players and ‘live electronics’ – and the experience of elite classical professional musicians, the researchers sought to use their expertise as a high-resolution microscope into the human-machine interaction. To question what they were seeing, they combined diverse academic disciplines. They made a series of composed test pieces, investigated the performers’ experience of playing them in workshop, documented and discussed that experience, and fed it iteratively into further making.

The primary investigator, Neal Farwell, had previously made a series of concert works that emphasized a ‘chamber music’ relationship between human player and electronic sound, but this had usually been mediated by a human operator. From talking to many musicians, and contact with current work by other composers, he identified a new aesthetic space for composing. It was characterized on one side by the discipline and organizational richness of fully scored music, with its layered invitation to expressive musicianship; on the other, by a letting-go of human mediation, replaced by electronic agency and its strangeness and challenge to the players.

This project found it artistically compelling to say: how far can this go? – and intellectually compelling to ask: what do we learn beyond the art-making?

These questions were explored with a three-part structure:

1) Discussion

This consisted of a half-day colloquium with all members of the project, held in person and virtually where needed. The researchers asked: what do they already know? What are they learning? What is next?

2) Provocation

The next step involved ‘off-line’ making that absorbed the findings from the discussion. This resulted in the creation of a set of short test pieces (scores and software), each targeted as a special challenge to one performer – and then given to all the performers.

3) Experience

Next, the researchers moved on to making the performances in private workshops. The lead composer, Farwell, collaborated with each of the classical musicians. The researchers tested performances and made adjustments to the computer agents. This process involved video documentation, verbal discussion, and written accounts. Finally, the project returned to step 1, Discussion. The researchers sought to ‘travel twice round the loop, beginning and ending at A’ (that is, at Discussion).

Who are the team and what do they bring?

- Neal Farwell (Music, University of Bristol) composes music for instruments and voices, for the “acousmatic” fixed medium, and for the meeting points of human players and live electronics. His work is commissioned by notable performers and performed internationally.

- Mieko Kanno is an outstanding violinist who has worked with many contemporary composers, as soloist and in top-flight ensembles. She owns members of the rare Violin Octet. She is also an academic who has published on questions of musical human-computer interaction and, as Director of the Centre for Artistic Research, Uniarts Helsinki, has wide-ranging insight into the research dimensions of artistic creation.

- Ulrich Mertin is a viola player, conductor and experimental composer of wide-ranging tastes and expertise, from folk to avant garde. He has performed with many of Europe’s premier contemporary music ensembles and worked with some of the most significant composers of recent decades. Based in Istanbul, he is co-director of Hezarfen ensemble, and deeply engaged in investigation of trans-cultural musical practices.

- Sinead Hayes is a classical violinist and an expert Irish fiddler, with a growing profile as a conductor. Her roles include conductor of Belfast’s Hard Rain Soloist Ensemble, Northern Ireland’s only professional new music ensemble; and assistant conductor and chorus director for Irish National Opera.

- Harriet Wiltshire is a period- and modern-instrument cellist, soloist, member of prestige UK ensembles including the Orchestra of the Age of Enlightenment and the Royal Philharmonic Orchestra, and session musician recording with prominent rock and pop artists.

- Fiona Jordan (Anthropology and Archaeology, University of Bristol) is a specialist in evolutionary and linguistic anthropology. She sees potential in a nascent ‘anthropology of AI’, and is particularly interested in exploring the language our musicians use to talk about their experiences and characterize their computer partners.

- Josh Habgood-Coote (Philosophy, University of Bristol) works primarily in epistemology, with a focus on the collective production of knowledge, and on the nature of knowledge-how. He is particularly interested in the co-production of knowledge by interdisciplinary groups, and in the challenges involved in this kind of research.

- Genevieve Liveley (Classics and Ancient History, University of Bristol) works on narratives and narrative theories both ancient and modern. A particular focus of her work has been developing narratologically inflected research into artificial humans and AI.

- Pete Bennett (Computer Science, University of Bristol) is a designer, musician, academic, artist and creative technologist. His research interests include Mobile and Wearable Computing, Tangible User Interfaces, Musical Instrument Design and Design Theory.

- Dan Bennett (Computer Science, University of Bristol) is a researcher in HCI at the Bristol Interaction Group, and works on human autonomy and agency in interaction with computational systems. He also has an interest in generative music systems.

What were the results?

The direct outputs were:

- A series of short musical test pieces (scores and software materials).

- A series of performances, in workshop.

- A project website and blog.

- Questions, methods, challenges, and co- and auto-ethnographic narrative.

- Music and video examples.

- Scoping plans for future projects and funding.

Neal Farwell gave a paper at the 2020 Society for Electro-Acoustic Music in the United States conference, 12-14 March 2020, University of Virginia.

Mieko Kanno gave the premiere of the concert work that came out of this project – in its violin form – at the Bristol New Music international festival (24 April 2020).