∆3 : Reading the Human through ∆rtificial, ∆ugmented and ∆rtistic Intelligence

What perceptions do robots have of humans? What does it mean for us to live in a world increasingly inhabited by artificial and augmented intelligence? This project aimed to further develop a network that crosses artistic, scientific and engineering disciplines to explore how machines see us.

What did the project involve?

With increasing public attention to how and whether we might trust intelligent robots in future societies, this project offered an intriguing and playful way to flip this question by imagining how robots might regard us. What implications do machines that see have for our privacy? What are the ethics of data commercialisation in a world where, when we look at robots, our gaze is returned?

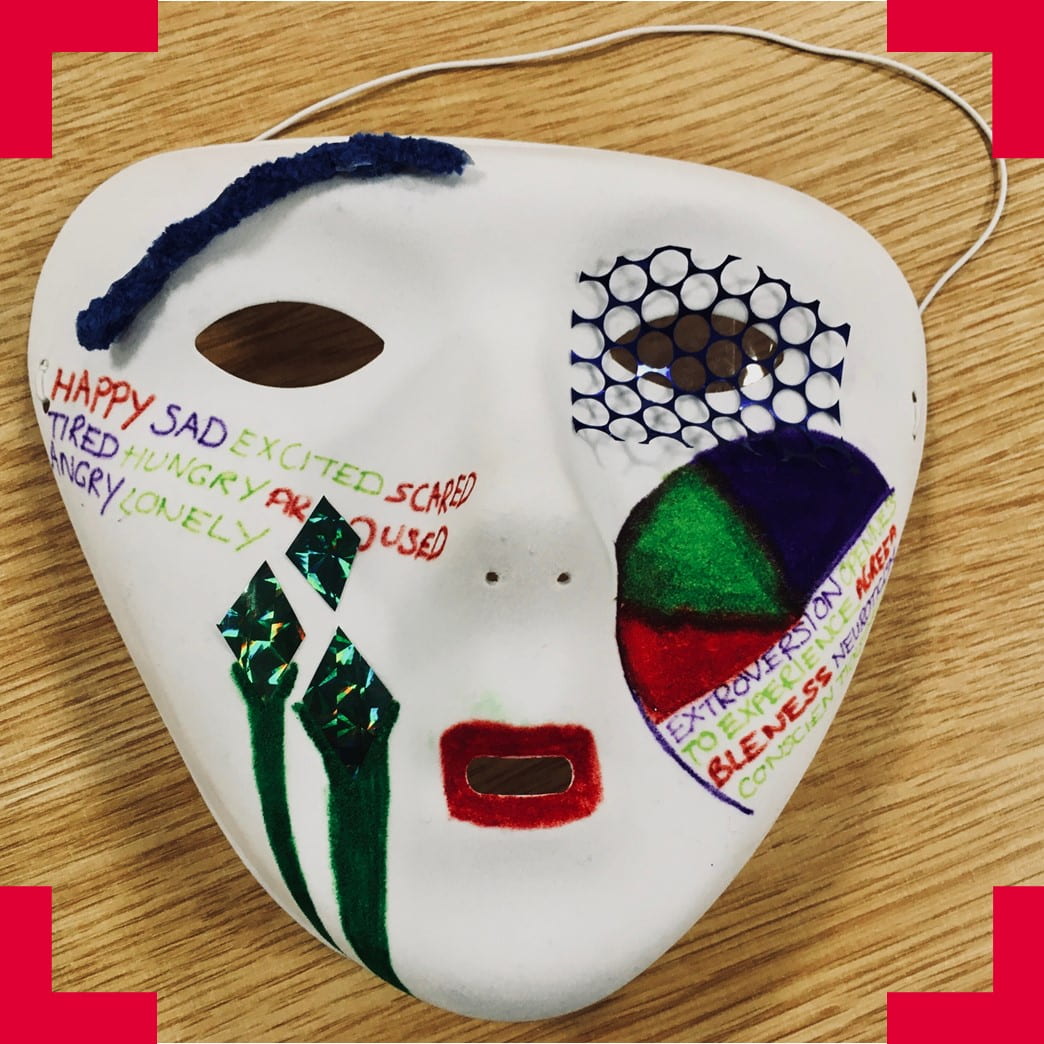

∆3 was an arts/engineering collaborative network that explored artificial and augmented intelligence through artistic research. In this project, the network used a series of arts-based workshops to experiment, examine and play together. Participants shared ideas, cultural reference points and a process of critical making to playfully augment the body. The aim was to reflect on how the practices of artistic research can contribute to new ways of thinking about artificial intelligence engineering and about how machines and robots are reading us.

Who are the team and what do they bring?

- Arthur Richards (Aerospace Engineering; Bristol Robotics Laboratory)

- Merihan Alhafnawi (FARSCOPE; Aerospace Engineering)

- Julian Hird (FARSCOPE; Aerospace Engineering)

- Katie Winkle (FARSCOPE; Aerospace Engineering)

- Joseph Daly (FARSCOPE; Aerospace Engineering)

- Mickey Li (FARSCOPE; Aerospace Engineering)

- Katy Connor (Artist, Spike Island)

- Susan Halford (Sociology, Politics and International Studies, Bristol Digital Futures Institute)