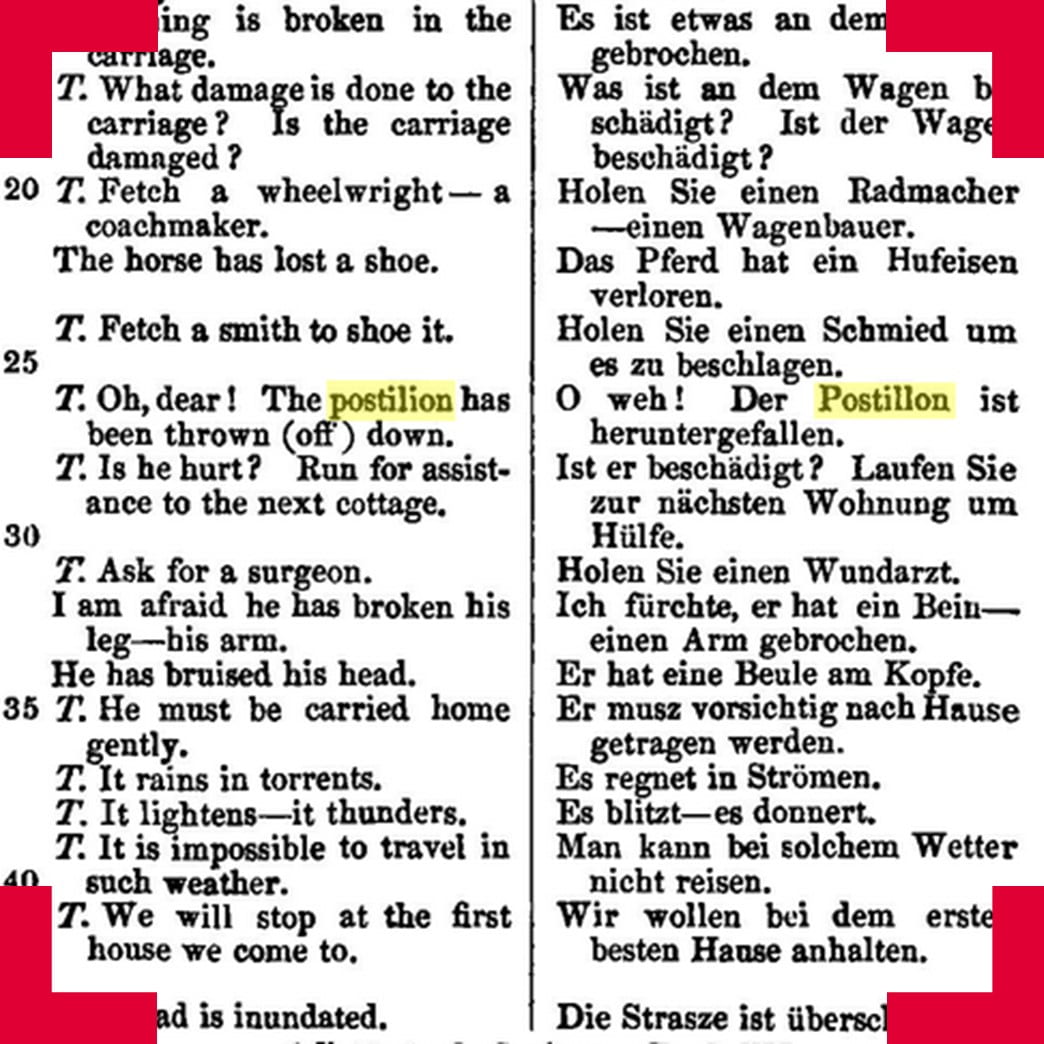

Postilion

How can we experiment with creating a repository of translated language that will be both a tool for language learners to learn and practice with and a place for linguists to store and organise pieces of translated text?

The vast diversity of languages is an astonishing and rich part of our cultural heritage, yet language differences are also a significant barrier to cultural diffusion. The fact that language can contain and discover meaning is one of the mysteries, and one of the wonders, of the working of the brain. Teaching machines to translate between languages is an interesting and important contemporary challenge.

What did the project involve?

The aim of this project was to build a repository for a multi-language corpus of translated words, sentences and passages. Along with contributing translations to the repository, users would be able to use the site to practice and develop their language skills; their responses would, in turn, be used to validate and extend the corpus. The repository would be completely open, exposing not just the corpus itself but also, in a suitably anonymized form, all the interactions learners have had with the repository. It would be a tool for language learners, a resource for the study of language and a repository for languages, both common and rare. The tool would document the errors and successes that make up language acquisition, and it would provide human-translated texts on which to train machine translation.

In short, the aim of this project was to create a repository of translated language. Users wishing to develop a language corpus, for example in an endangered language they wish to help preserve or promote, would be able to upload sentences, phrases, words or passages, with or without translations. Users wishing to practice their language skills would be presented with translation exercises; after responding with their own translation, they would be shown the consensus translation supplied by other users, if one exists. Their own translation would be stored and might subsequently contribute to the consensus. The repository was also intended to hypothesize translations using transitivity: if a sentence has been translated from French to English and from English to Irish, the repository would guess the translation from French to Irish and test that guess by setting it as an exercise for French-to-Irish learners.
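The consensus and transitivity ideas described above can be illustrated with a minimal sketch. It is not the project's implementation: the `Repository` class, its method names, the use of a simple majority vote as the "consensus", and the language codes are all assumptions made for illustration.

```python
from collections import Counter, defaultdict


class Repository:
    """Toy in-memory store of translations (hypothetical, for illustration only)."""

    def __init__(self):
        # (source_lang, source_text, target_lang) -> votes for each submitted translation
        self._submissions = defaultdict(Counter)

    def submit(self, src_lang, text, tgt_lang, translation):
        """Record one user's translation of `text`."""
        self._submissions[(src_lang, text, tgt_lang)][translation] += 1

    def consensus(self, src_lang, text, tgt_lang):
        """Return the most frequently submitted translation, or None if there is none.

        Assumption: consensus is a plain majority vote over submissions.
        """
        votes = self._submissions.get((src_lang, text, tgt_lang))
        if not votes:
            return None
        return votes.most_common(1)[0][0]

    def hypothesize(self, src_lang, text, tgt_lang, pivot_lang):
        """Guess a translation by chaining two consensus translations via a pivot language."""
        pivot_text = self.consensus(src_lang, text, pivot_lang)
        if pivot_text is None:
            return None
        return self.consensus(pivot_lang, pivot_text, tgt_lang)


repo = Repository()
repo.submit("fr", "Bonjour", "en", "Hello")
repo.submit("fr", "Bonjour", "en", "Hello")
repo.submit("fr", "Bonjour", "en", "Good day")
repo.submit("en", "Hello", "ga", "Dia duit")

# French -> Irish guessed through English; it would then be set as an exercise to test it.
guess = repo.hypothesize("fr", "Bonjour", "ga", pivot_lang="en")
```

In this sketch the guessed translation is only a hypothesis: it would still need to be confirmed or corrected by learners' responses before it could count towards a French-to-Irish consensus.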

Four summer interns were employed to work on a pilot version of the tool and to trial the creation of the repository; the interns were chosen to mirror the disciplinary distribution of the three team members, so that the spirit of interdisciplinary research and building would be shared with engaged undergraduates.

Who are the team and what do they bring?

- Conor Houghton (University of Bristol) is a computational neuroscientist with an interest in learning, memory and decision making. He has considerable experience of interdisciplinary research.

- Lucas Nunes Vieira (University of Bristol) is a linguist and translation studies scholar interested in the interaction between humans and computers in language translation processes. He researches the different roles played by machine translation in human translation practices and is particularly interested in the cognitive aspects of this interaction.

- Nicolas Wu (University of Bristol) is a computer scientist who specialises in programming languages and software engineering. He has worked as a consultant in industry for projects that involve cloud-hosted web platforms, and has developed a variety of database driven applications. He has worked on several interdisciplinary projects, one concerned with the extraction of meaning from natural language using inductive logic programming.

What were the results?

This project sought to initiate and pilot a new collaboration spanning computer science, translation studies and computational neuroscience. The project raised difficult questions in machine-supported corpus building: how should ambiguous translations be treated; how should sentences be matched transitively across multiple languages; how should the repository deal with polycentric or diglossic languages; and how can data be managed so that the tool runs quickly and scales effectively if it proves popular?

The original strategy for the trained machine translation aspect of the project proved to be too ambitious. As a consequence, the project was unable to achieve a working model. However, important outcomes were achieved in clarifying, across the two faculties involved, the challenges the project poses. Further specialists, such as an expert in endangered languages and a professional programmer, were identified as necessary resources if the project were to be extended further.